What makes a robot a robot?

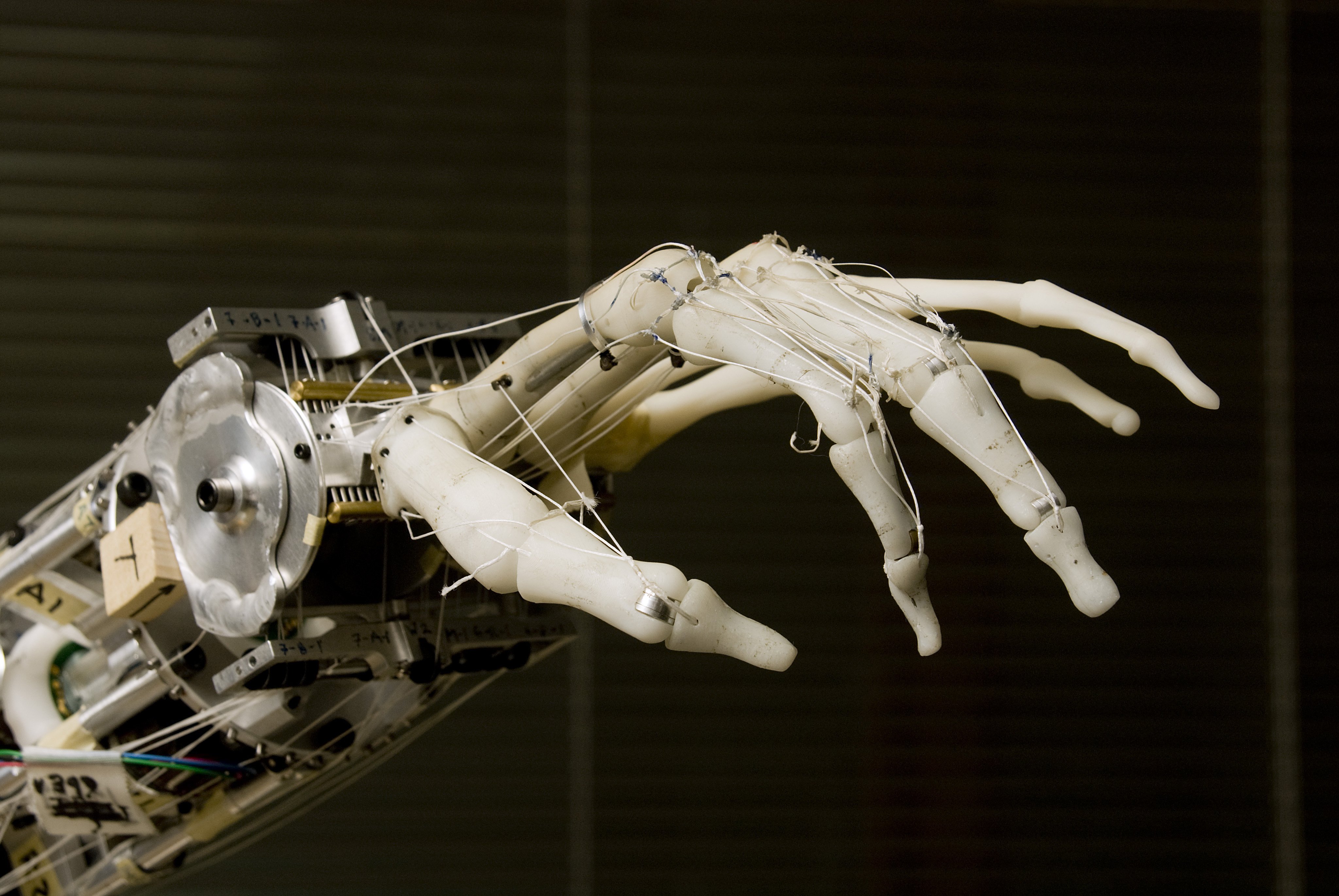

A robot or autonomous system (RAS) consists of the entity itself and its interactions with the world, including other RAS and humans. The key elements of any RAS are the abilities to sense and perceive the world, make intelligent decisions, and take appropriate actions.
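The three abilities named above (sensing, deciding, acting) are often pictured as a repeating loop. The following is a minimal sketch of that idea, not a real robot controller: the one-dimensional `World`, the function names `sense`, `decide`, `act` and `run`, and the 1.0 safe distance are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class World:
    """Hypothetical 1-D world: a robot driving towards a fixed obstacle."""
    robot_position: float = 0.0
    obstacle_position: float = 5.0


def sense(world: World) -> float:
    """Sense and perceive: measure the distance to the obstacle."""
    return world.obstacle_position - world.robot_position


def decide(distance: float, safe_distance: float = 1.0) -> str:
    """Decide: keep moving only while the obstacle is beyond the safe distance."""
    return "forward" if distance > safe_distance else "stop"


def act(world: World, action: str, step: float = 1.0) -> None:
    """Act: change the state of the world according to the chosen action."""
    if action == "forward":
        world.robot_position += step


def run(world: World, max_steps: int = 20) -> list[str]:
    """Repeat sense -> decide -> act until the robot decides to stop."""
    actions = []
    for _ in range(max_steps):
        action = decide(sense(world))
        actions.append(action)
        if action == "stop":
            break
        act(world, action)
    return actions


if __name__ == "__main__":
    world = World()
    print(run(world))
    print(world.robot_position)
```

With the default values the robot moves forward four times and then stops one unit short of the obstacle. A real RAS replaces each function with something far richer (cameras and lidar for sensing, planning or learning for decisions, motors for action), but the loop structure is the same.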

A driverless car with labelled sensors. Photo: John Greenfield