Introduction to implementation science

In this step, we will highlight why implementing interventions is a challenge and how implementation science can help you to plan and deliver your intervention effectively wherever you are.

Problems with interventions

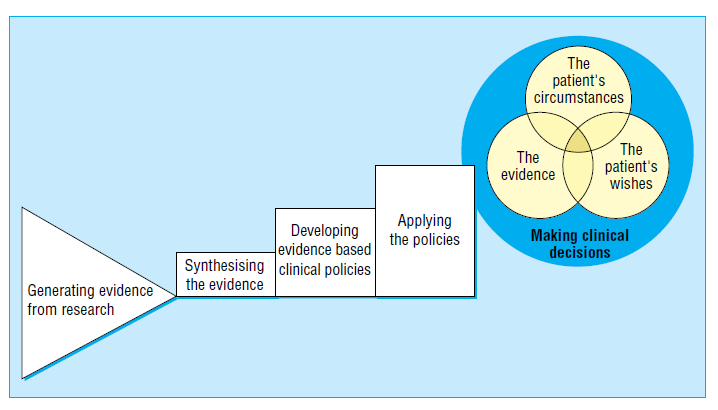

Right now, interventions are being developed, implemented and (in some cases) evaluated to help improve and advance healthcare across the globe, including novel enhancements to antimicrobial stewardship programmes and infection prevention and control. However, it has been reported that evidence-based practices can take an average of 17 years to be incorporated into routine clinical practice, as seen in figure 1.

Figure 1 – The path from the generation of evidence to the application of evidence from Haynes B, Haines A, BMJ 1998;317:273-6

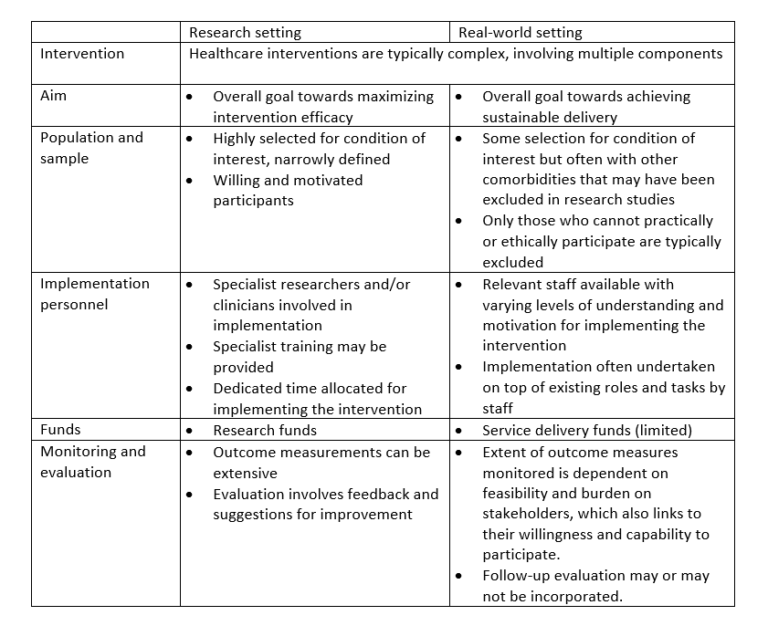

In other words, there is a critical gap between research and practice. So why is this? Table 1 highlights a number of key differences between implementing interventions in trial settings compared with real-world clinical practice settings. You can see that while the intended intervention to be implemented is the same, the structures, processes and outcomes of implementation in a research setting versus a real-world setting can be very different.

Table 1 – Comparing research settings to real-world settings

Complexity of implementing and evaluating interventions in healthcare

An underlying challenge with implementing and evaluating interventions in healthcare is that they are typically complex. By this we mean:

- The intervention has a number of separate components or elements

- Combinations of components are necessary for the intervention to work but the type of combinations may differ in different settings

- The components may act independently or interdependently

- The intervention often has behavioural elements

- There is some uncertainty regarding the ‘active ingredients’ (i.e. what is driving the effect?)

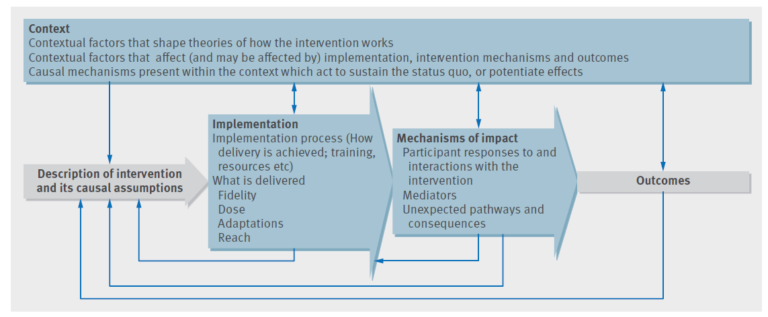

Recognising that process evaluation is an essential part of designing and testing complex interventions, the Medical Research Council (UK) published a framework to help researchers and clinicians undertake this work more effectively.

Briefly, the framework highlights the relationships between implementation, mechanisms, and context (figure 2). For example, implementation of an intervention will be affected by the context (setting), and the intervention may in turn change aspects of that context, affecting how it is delivered. It is therefore important for us as researchers and clinicians to plan implementation of any intervention carefully, and to design the evaluation so that it monitors for both intended and unintended consequences as far as possible. This is where implementation science can offer some useful approaches for anyone who is interested in translating research into practice.

Figure 2 – Key functions of process evaluation and relations among them (blue boxes are the key components of a process evaluation. Investigation of these components is shaped by a clear intervention description and informs interpretation of outcomes) from Moore et al, BMJ 2015;350:h1258

What is implementation science?

Implementation science has been defined as “the scientific study of methods to promote the systematic uptake of research findings and other evidence-based practices into routine practice, and hence, to improve the quality and effectiveness of health services”. Implementation science therefore investigates how an intervention works, for whom, under what circumstances and why, and explores how interventions can be adapted and scaled up in ways that make them accessible. As such, implementation research requires interdisciplinary collaboration among stakeholders, such as health services researchers, front-line clinicians, patients, anthropologists, health psychologists, sociologists, organisational scientists, organisational administrators and managers.

We have already mentioned that healthcare interventions are complex. One common assumption about implementation is that an intervention must be delivered in the same way wherever it is implemented, and that it must also be transferable. However, these two concepts are not the same. Implementation fidelity is the degree to which an intervention is delivered as intended, whilst transferability is the degree to which an intervention can be adapted for delivery in different contexts.

Implementation fidelity can be divided into five areas:

- Adherence: intervention delivered as designed/written

- Exposure (dose): how much of the intervention was received (i.e. frequency, duration, coverage rate)

- Quality of delivery: manner in which intervention is delivered

- Participant responsiveness: reactions of participants or recipients

- Programme differentiation: identifying which elements of the intervention are actually essential (‘active ingredients’)

Due to the complexity of healthcare interventions and the diversity of real-world settings, it is not necessary or appropriate to require or expect high implementation fidelity. Within implementation science, it is recognised that a balance is required between intervention fidelity and intervention adaptability. Some components of the intervention may be required in one setting whilst another set of components may be needed in another.

Theories and frameworks in implementation research

Although the two terms are often used interchangeably, a theory is quite different from a framework. Here, the term ‘theory’ describes any proposal about meaningful relationships between constructs (variables), or about how context may change a mechanism, behaviour or outcome. A framework is a set of constructs organised in a way that provides a descriptive narrative of those constructs, without implying causal relationships.

A range of theories and frameworks has been used in implementation science research, and you can find out more about these in the ‘See also’ section below. One of these is the Consolidated Framework for Implementation Research (CFIR), which has been used in a number of antimicrobial stewardship-related studies and will be described in more detail in step 3.4.
