What is Principal Component Analysis (PCA)?

This article is from the free online course Statistical Shape Modelling: Computing the Human Anatomy.

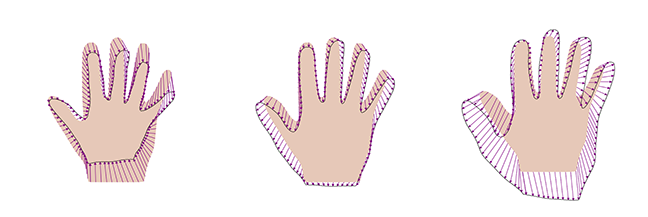

Figure 1: the shape variation represented by the first principal component of the hand model. The hand on the left shows the deformation with \(\hat{\alpha}_1 = -3\), the middle hand shows the mean deformation (\(\hat{\alpha}_1 = 0\)), and the hand on the right shows the deformation with \(\hat{\alpha}_1 = 3\).
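The three hands in Figure 1 can be read as one formula evaluated at three coefficient values. The sketch below illustrates this under an assumption the caption does not state explicitly: that the coefficient of the first component is measured in standard deviations, so the shape is the mean plus the coefficient times the square root of the first eigenvalue times the first eigenvector. The function name `shape_along_component` and the toy numbers are hypothetical and are not taken from the hand model or from Scalismo.

```python
import math


def shape_along_component(mean, eigenvector, eigenvalue, alpha):
    """Return mean + alpha * sqrt(eigenvalue) * eigenvector, element-wise.

    Assumes alpha is expressed in standard deviations along the component.
    """
    scale = alpha * math.sqrt(eigenvalue)
    return [m + scale * e for m, e in zip(mean, eigenvector)]


# Hypothetical toy model: two 2D landmarks flattened to (x1, y1, x2, y2).
mean_shape = [0.0, 0.0, 1.0, 0.0]
first_component = [0.0, 0.0, 1.0, 0.0]  # unit vector: moves landmark 2 along x
first_eigenvalue = 0.04                 # variance of the data along that vector

# Same three coefficient values as in Figure 1: left, mean, right.
for alpha in (-3, 0, 3):
    shape = shape_along_component(
        mean_shape, first_component, first_eigenvalue, alpha
    )
    print(alpha, shape)
```

With a coefficient of 0 the function returns the mean shape unchanged, which corresponds to the middle hand. The values -3 and 3 move the shape three standard deviations in opposite directions along the same component.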

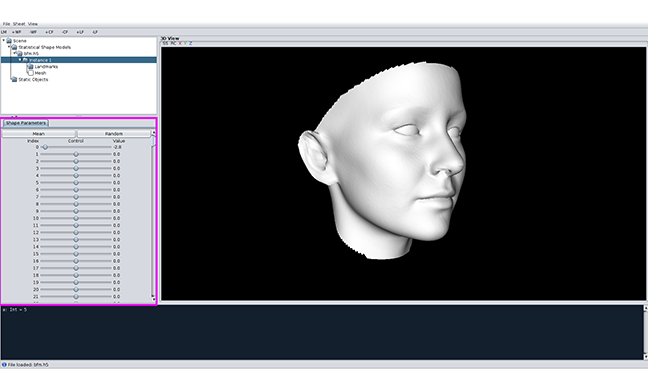

Figure 2: visualizing shape variations in Scalismo Lab
