A Bird's-Eye View on Crops


Drones – more than toys for boys and girls.

An article by Yasmin Van Brabant, Stien Hermans, Irene Borra Serrano, Wouter Saeys and Ben Somers, from KU Leuven.

I'm pretty sure that many of you have already flown a drone – a remotely piloted model aircraft equipped with a camera – like the ones you can buy in your local toy store. Yet drones are also used in professional contexts, for example to obtain information about the state of crops in the field. These professional drone systems, also called Unmanned Aerial Vehicles (UAV) or Remotely Piloted Aircraft Systems (RPAS), come in all shapes and sizes. They are equipped with an autopilot system, a GPS receiver, a compass and a barometric altimeter, which together allow the drone to fly fully autonomously. They can carry different instruments, such as a video camera, a photographic camera, or thermal and infrared sensors. The drone images (see Figure 1) do not only provide a bird's-eye view of the farm; the collected information also allows a detailed characterization of the condition of individual crops. Moreover, compared with more traditional remote sensing platforms such as satellites and (manned) aircraft, drones can provide images with a much finer spatial resolution and allow greater flexibility in when to fly. Put together, it should not come as a surprise that drone technology offers interesting opportunities for precision farming. In the remainder of this article we will walk you through some of the possibilities of drone-based crop monitoring.

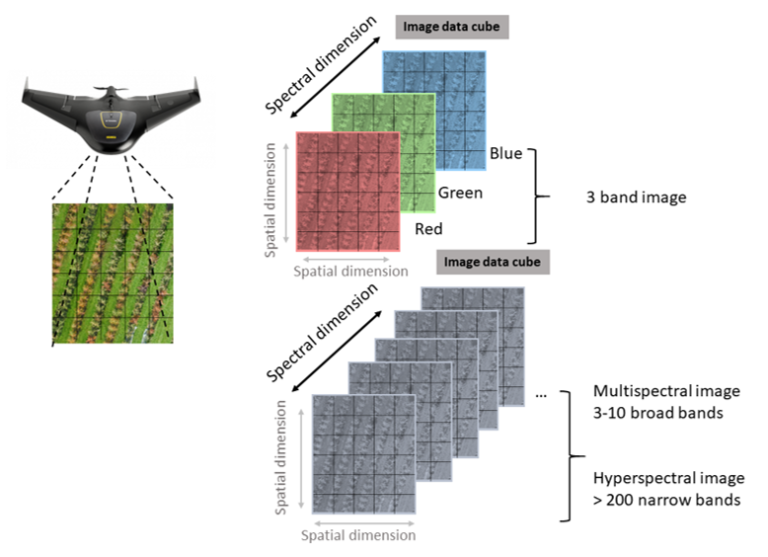

Figure 1 – A raster image cube

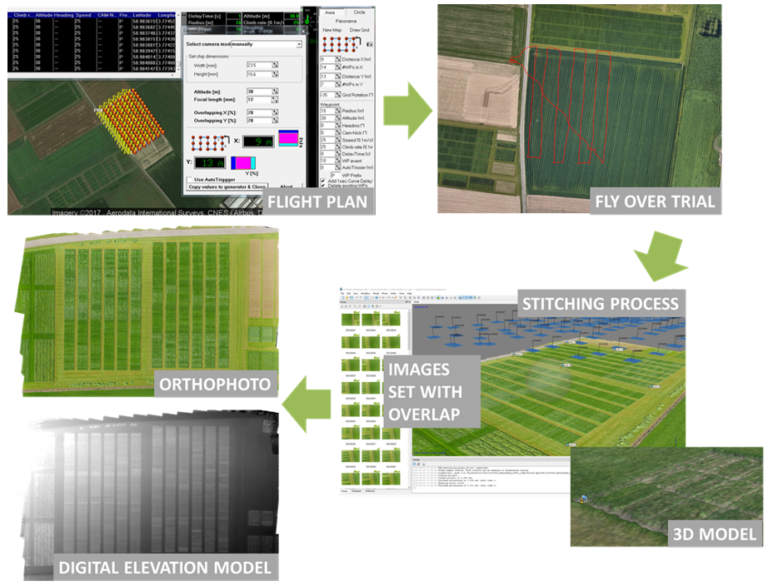

Drones typically provide image data in raster format. A raster consists of a matrix of cells (or pixels) organized into rows and columns, where each cell contains a value. In the case of images, these values correspond to the amount of light in a certain waveband (color) that is reflected by objects on the ground. For each waveband a separate raster layer is created. For a simple RGB photographic camera sensitive to red, green and blue light (like the one in your smartphone), the final raster cube consists of three layers, one for each color or waveband.

Unlike the pictures you take for fun, imagery used for research should be scientifically valid. This means that the images need to undergo some "preprocessing" steps before they can be used for further analysis. While flying, the drone takes many overlapping images of the agricultural field, which are stitched together into one large image tile (= mosaicking). The resulting image tile is then linked to the correct location on Earth (= georeferencing). Finally, potential artifacts related to the characteristics of the sensor, the atmospheric conditions, the position of the sun at the time of acquisition and the local topography are removed (= radiometric correction).
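To make the raster cube idea concrete, here is a minimal Python sketch that stores each waveband as a grid of rows and stacks three bands into a cube. The radiometric step is a hypothetical one-point calibration against a reference panel of known reflectance: it assumes the sensor response is linear and zero for a black target, and it ignores the atmospheric, sun-angle and topographic effects mentioned above, so treat it as an illustration rather than a full correction workflow. The names `make_cube` and `to_reflectance` are our own.

```python
from typing import List

# One raster layer: a grid of cell values, indexed as layer[row][column].
Layer = List[List[float]]


def make_cube(red: Layer, green: Layer, blue: Layer) -> List[Layer]:
    """Stack one layer per waveband into a cube indexed as cube[band][row][column]."""
    shapes = {(len(band), len(band[0])) for band in (red, green, blue)}
    if len(shapes) != 1:
        raise ValueError("all bands must have the same number of rows and columns")
    return [red, green, blue]


def to_reflectance(layer: Layer, panel_dn: float, panel_reflectance: float) -> Layer:
    """Hypothetical one-point radiometric calibration.

    Assumes raw digital numbers (DN) scale linearly with reflectance and are
    zero for a perfectly black target, so that
    reflectance = DN * panel_reflectance / panel_dn,
    where panel_dn is the DN recorded over a reference panel of known reflectance.
    """
    if panel_dn <= 0:
        raise ValueError("panel_dn must be positive")
    return [[dn * panel_reflectance / panel_dn for dn in row] for row in layer]


if __name__ == "__main__":
    red = [[120.0, 80.0], [60.0, 200.0]]
    green = [[90.0, 160.0], [150.0, 40.0]]
    blue = [[70.0, 50.0], [45.0, 30.0]]
    cube = make_cube(red, green, blue)
    print(len(cube), "bands; green value at row 0, column 1:", cube[1][0][1])
    print(to_reflectance(red, panel_dn=400.0, panel_reflectance=0.5))
```

Keeping the band as the first index mirrors the "one layer per waveband" description; a real pipeline would typically use an array library and a geospatial raster format instead of nested lists.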

Monitoring crop biomass

As farming is all about the production of (edible) biomass, monitoring crop development is important for farmers when making management decisions. Drone images are very well suited for this type of application. One of the simplest approaches is to use drones to monitor the ground coverage of (individual) crops throughout the growing season. We can train computer algorithms to recognize the difference in appearance between crop pixels and non-crop pixels (e.g. shade, bare soil and weeds), so that they learn to automatically extract all the crop pixels from drone images. Based on this classification of the pixels (crop or non-crop), maps can be derived that reveal areas with lower crop coverage, so that decisions can be made about remediation (e.g. fertilizer or herbicide application).
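The article does not specify which algorithm is trained to separate crop from non-crop pixels. As a simple stand-in, the sketch below swaps in a fixed rule based on the Excess Green index, ExG = (2G - R - B) / (R + G + B), a common way to separate green vegetation from background in RGB images, instead of a trained classifier. It then computes the fraction of crop pixels per square block, giving a coarse coverage map in which low values point to areas that may need attention. The threshold of 0.1 and the block size are illustrative assumptions, not calibrated values, and a plain colour rule like this cannot tell crops from green weeds.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (red, green, blue) values of one cell


def excess_green(pixel: Pixel) -> float:
    """Normalized Excess Green index: (2G - R - B) / (R + G + B)."""
    r, g, b = pixel
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: treat as non-vegetation
    return (2 * g - r - b) / total


def is_crop(pixel: Pixel, threshold: float = 0.1) -> bool:
    # Assumed rule: clearly green pixels count as crop; soil, shade, etc. do not.
    return excess_green(pixel) > threshold


def coverage_map(image: List[List[Pixel]], block: int, threshold: float = 0.1) -> List[List[float]]:
    """Fraction of crop pixels in each block x block window of the image."""
    rows, cols = len(image), len(image[0])
    result = []
    for r0 in range(0, rows, block):
        out_row = []
        for c0 in range(0, cols, block):
            window = [
                image[r][c]
                for r in range(r0, min(r0 + block, rows))
                for c in range(c0, min(c0 + block, cols))
            ]
            crop_pixels = sum(is_crop(p, threshold) for p in window)
            out_row.append(crop_pixels / len(window))
        result.append(out_row)
    return result


if __name__ == "__main__":
    leaf = (50.0, 200.0, 50.0)
    soil = (120.0, 100.0, 80.0)
    field = [[leaf, leaf, soil, soil],
             [leaf, soil, soil, soil]]
    print(coverage_map(field, block=2))
```

For this toy field the left block is three-quarters crop and the right block is bare soil, so the script prints `[[0.75, 0.0]]`.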

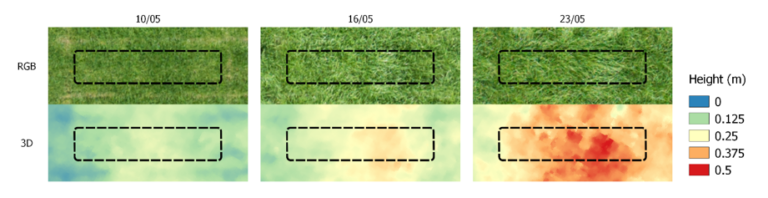

More advanced image processing makes it possible to estimate the height of individual plants and to use these height data to estimate biomass. To do so, the 2D drone images are converted into a 3D representation of the crops (see Figure 3). From this 3D representation, structural information such as canopy height and volume can be extracted as indicators of the amount of biomass. Figure 2 below illustrates how such 3D representations derived from drone images allow us to monitor the growth of perennial ryegrass, a grass commonly found on pastures.

Figure 2 – How 3D representations derived from drone images allow us to monitor growth of perennial ryegrass

Figure 3 – From 2D images to a 3D model of crops
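Once a 3D model is available, canopy height is often obtained by subtracting a bare-ground elevation model (digital terrain model, DTM) from the crop surface elevation model (digital surface model, DSM). The sketch below assumes both are already available as co-registered grids of elevations in metres with the same cell size; it then derives simple height and volume statistics of the kind the article mentions as biomass indicators. Turning these numbers into actual biomass would require a calibration against field measurements, which is not shown here.

```python
from typing import Dict, List

Grid = List[List[float]]  # elevations in metres, indexed as grid[row][column]


def canopy_height_model(dsm: Grid, dtm: Grid) -> Grid:
    """Canopy height per cell = surface elevation - ground elevation (negatives clipped to 0)."""
    return [
        [max(surface - ground, 0.0) for surface, ground in zip(dsm_row, dtm_row)]
        for dsm_row, dtm_row in zip(dsm, dtm)
    ]


def canopy_stats(chm: Grid, cell_area_m2: float) -> Dict[str, float]:
    """Simple structural metrics that can serve as biomass indicators."""
    heights = [h for row in chm for h in row]
    return {
        "max_height_m": max(heights),
        "mean_height_m": sum(heights) / len(heights),
        # Each cell is treated as a column of height h over cell_area_m2.
        "volume_m3": sum(heights) * cell_area_m2,
    }


if __name__ == "__main__":
    dsm = [[10.5, 10.25], [10.0, 10.75]]  # crop surface elevations
    dtm = [[10.0, 10.0], [10.0, 10.0]]    # bare-ground elevations
    chm = canopy_height_model(dsm, dtm)
    print(chm, canopy_stats(chm, cell_area_m2=0.25))
```

Clipping negative differences to zero is a design choice: small negative values usually come from noise in the two models rather than from real below-ground vegetation.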

When drone images are collected, they are often acquired as a series of overlapping images, as can be seen in the illustration below.

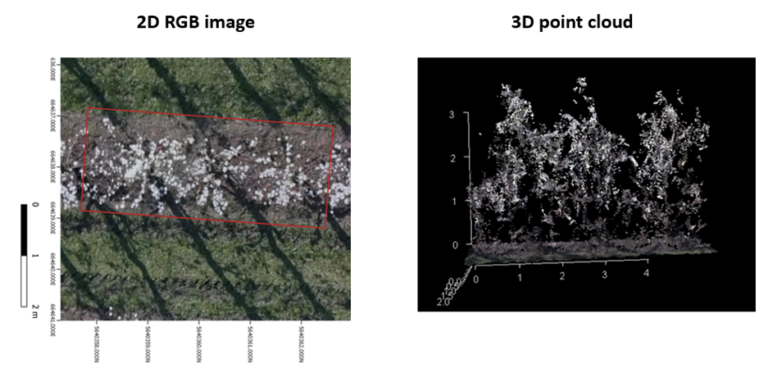
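How far apart these overlapping photos are taken follows from simple geometry. For a camera pointing straight down, the ground footprint along one image side is sensor size × flight altitude / focal length, and the distance between consecutive photo centres is that footprint × (1 - overlap). The sketch below assumes flat terrain and a pinhole camera model; the sensor, lens and altitude values in the example are made-up illustrative numbers, not values from the article.

```python
def ground_footprint_m(sensor_size_mm: float, focal_length_mm: float, altitude_m: float) -> float:
    """Ground distance covered by one image side (pinhole model, nadir view, flat terrain)."""
    return sensor_size_mm * altitude_m / focal_length_mm


def photo_spacing_m(footprint_m: float, overlap: float) -> float:
    """Distance between consecutive photo centres for an overlap fraction in [0, 1)."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m * (1.0 - overlap)


if __name__ == "__main__":
    # Illustrative values only: 12 mm sensor side, 8 mm lens, 40 m altitude.
    footprint = ground_footprint_m(12.0, 8.0, 40.0)
    print(footprint, photo_spacing_m(footprint, overlap=0.75))
```

With these numbers each image covers 60 m on the ground, so 75 % overlap means taking a photo every 15 m; higher overlap gives more viewing angles per point at the cost of more images.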

The main reason for collecting overlapping drone images is to allow the development of a 3D model that also includes height information. A commonly applied procedure to achieve this is Structure from Motion (SfM). This approach is based on the theory of stereo vision, mimicking the way human vision allows the perception of depth. The basic principle is that you observe the same object from different observation positions or angles (i.e. the different positions of our two eyes in human vision vs. the different viewing angles in the case of overlapping drone images).
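The stereo principle behind SfM can be illustrated with its simplest case: two images taken from camera positions a known distance apart (the baseline B), with parallel, downward-looking cameras. A point then shifts between the two images by a disparity d (in pixels), and its distance from the camera is Z = f · B / d, where f is the focal length expressed in pixels. Nearby objects such as treetops shift more than distant ones such as the ground, which is exactly the depth cue. Full SfM additionally estimates the camera positions and orientations from the images themselves and combines many views; the sketch below, with hypothetical numbers, covers only this two-view geometry.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance from camera to a point seen in two rectified, parallel views: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def object_height_m(focal_px: float, baseline_m: float,
                    ground_disparity_px: float, top_disparity_px: float) -> float:
    """Height above ground = depth of the ground - depth of the object's top."""
    ground = depth_from_disparity(focal_px, baseline_m, ground_disparity_px)
    top = depth_from_disparity(focal_px, baseline_m, top_disparity_px)
    return ground - top


if __name__ == "__main__":
    # Hypothetical: 4000 px focal length, photos taken 10 m apart,
    # the ground shifts 1000 px between the images, a treetop shifts 1250 px.
    print(object_height_m(4000.0, 10.0, 1000.0, 1250.0))
```

Here the ground lies 40 m below the camera and the treetop 32 m, so the script prints a tree height of 8.0 m.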

Below you can see an illustration of the SfM approach applied to drone images acquired over a pear orchard in Belgium (Figure 4). The 3D structure of individual branches and leaves becomes apparent.

Figure 4 – 2D RGB image and 3D Point Image

Counting Flowers and Fruits

With drone images, we can estimate the number of fruits in apple or pear orchards well before their actual harvest dates. This allows fruit farmers to organize the harvest and storage of their fruits efficiently. In spring, we can already get a first idea of the potential harvest by counting the number of flower clusters. As counting them manually would take a long time, farmers prefer a more automated approach. In the left pane of Figure 5 below, you can see that on a drone image the white flowers of pear trees are quite distinct from their surroundings. This means that they can easily be distinguished using simple computer classification algorithms. In the middle pane, these flower pixels have been highlighted in blue. As multiple pixels correspond to the same flower, pixels close to each other are grouped, as shown in the right pane.

Figure 5 – Counting flowers and fruits

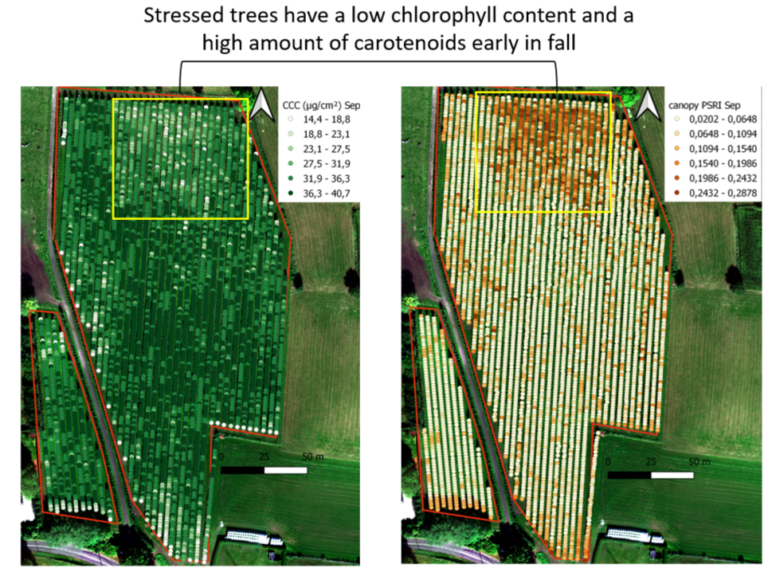
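The article does not say which classifier or grouping method was used for the flower counts. The sketch below stands in a fixed brightness rule for the classifier (a pixel counts as "flower" if all three RGB values are at least 200, an assumed threshold for 8-bit images) and groups touching flower pixels with connected-component labelling using 8-connectivity, as one plausible way of grouping "pixels close to each other". Each group is counted as one flower cluster; the optional `min_size` filter, also our own addition, drops tiny specks that are likely noise.

```python
from collections import deque
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (red, green, blue)


def is_flower(pixel: Pixel, min_value: int = 200) -> bool:
    # Assumed rule: bright in all three bands means white, i.e. a flower pixel.
    return all(v >= min_value for v in pixel)


def count_clusters(image: List[List[Pixel]], min_value: int = 200, min_size: int = 1) -> int:
    """Count groups of touching flower pixels (8-connectivity) with at least min_size pixels."""
    rows, cols = len(image), len(image[0])
    mask = [[is_flower(image[r][c], min_value) for c in range(cols)] for r in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first search over all flower pixels connected to (r, c).
            size = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                size += 1
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            if size >= min_size:
                count += 1
    return count


if __name__ == "__main__":
    W, G = (250, 250, 250), (60, 120, 50)  # white flower, green foliage
    image = [[W, W, G, G, G],
             [W, G, G, G, W],
             [G, G, G, G, W],
             [G, W, G, G, G]]
    print(count_clusters(image), count_clusters(image, min_size=2))
```

The toy image contains three separate white groups, so the script prints `3 2`: the single isolated pixel is dropped once `min_size=2`.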

Crop vitality assessment

For farmers, it is really important to closely monitor the health and vitality of their crops, as this allows them to respond quickly to stressors like droughts, pests and diseases. Drone cameras can capture the world in the colors that we can see with our own eyes (visible wavelengths), but they can also capture 'colors' that are invisible to us, like infrared light. When plants encounter stress, both the chemical composition and the internal structure of their leaves are altered. Changes in a plant's chemical composition, especially in leaf pigments like chlorophyll and carotenoids, mainly affect the visible wavelengths, whereas changes in the tissue structure mainly affect the invisible infrared wavelengths. Therefore, the ratio of reflected infrared to visible light can give us a good indication of the plant's vitality or health. Several plant health indicators, called 'vegetation indices', have been developed and used for the early detection of stress in crops. The figure below displays two of these vegetation indices, both related to leaf pigments, for an orchard. Based on the vegetation index values, the stressed plants in the top part of the parcel can clearly be distinguished from the healthy plants in the bottom part.

Figure 6 – Crop vitality assessment
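A widely used vegetation index built on the infrared-to-visible contrast described above is the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), together with the simple ratio SR = NIR / Red. These are general-purpose examples and not necessarily the two pigment-related indices shown in Figure 6. The sketch assumes per-pixel reflectance values (0 to 1) in the red and near-infrared bands; the 0.4 stress threshold is a hypothetical value chosen for illustration, since in practice thresholds depend on crop, sensor and growth stage.

```python
from typing import List


def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index for one pixel (reflectances)."""
    total = nir + red
    if total == 0:
        return 0.0
    return (nir - red) / total


def simple_ratio(nir: float, red: float) -> float:
    """Ratio of near-infrared to red reflectance."""
    if red == 0:
        raise ValueError("red reflectance must be non-zero")
    return nir / red


def flag_stressed(nir_band: List[float], red_band: List[float], threshold: float = 0.4) -> List[bool]:
    """Mark pixels whose NDVI falls below a (hypothetical) stress threshold."""
    return [ndvi(n, r) < threshold for n, r in zip(nir_band, red_band)]


if __name__ == "__main__":
    nir_band = [0.75, 0.5]   # healthy canopy reflects strongly in the near-infrared
    red_band = [0.25, 0.25]
    print([ndvi(n, r) for n, r in zip(nir_band, red_band)])
    print(flag_stressed(nir_band, red_band))
```

The first pixel has an NDVI of 0.5 and the second about 0.33, so only the second is flagged as stressed (`[False, True]`).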

Conclusion

As the concrete examples above illustrate, drones can be a very useful source of information for farmers. They can help farmers map the within-field variability in crop productivity and vitality, even down to the level of the individual plant. As such, they can inform farmers on where, when and how to (re)act in order to optimize their harvest. Moreover, they can provide production estimates early in the growing season, allowing farmers to plan ahead in terms of management, harvest and storage capacity.

For interested readers, we happily refer to some key literature that expands on current drone applications in agriculture. Please see the References document in the Downloads section below.


Revolutionising the Food Chain with Technology
