Posterior models for different kernels

This article is from the free online course Statistical Shape Modelling: Computing the Human Anatomy.

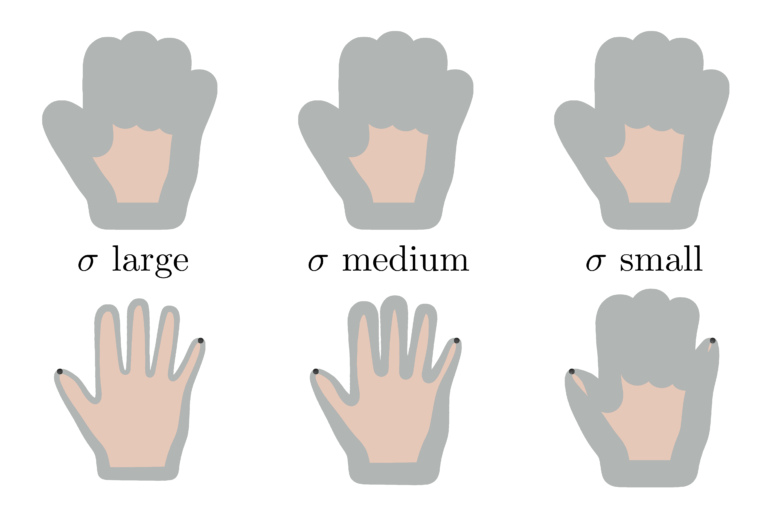

Figure 1: Confidence region of a Gaussian kernel with σ large (left), medium (middle) and small (right). The upper row shows the prior and the bottom row the posterior after the points have been constrained using Gaussian Process regression.
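The effect of σ on the posterior can be illustrated with a minimal one-dimensional sketch. It assumes a scalar Gaussian kernel of the form k(x, y) = s · exp(−(x − y)² / σ²), a single observed point and a small observation noise; the function names, the noise level and the chosen σ values are illustrative assumptions and are not taken from the figure.

```python
import math

def gaussian_kernel(x, y, sigma, scale=1.0):
    """Scalar Gaussian kernel k(x, y) = scale * exp(-(x - y)^2 / sigma^2) (assumed form)."""
    return scale * math.exp(-((x - y) ** 2) / sigma ** 2)

def posterior_variance(x, x_obs, sigma, noise=1e-6):
    """Posterior variance at x after observing the process at one point x_obs.

    This is Gaussian Process regression for a single observation:
    var(x) = k(x, x) - k(x, x_obs)^2 / (k(x_obs, x_obs) + noise).
    """
    k_xx = gaussian_kernel(x, x, sigma)
    k_xo = gaussian_kernel(x, x_obs, sigma)
    k_oo = gaussian_kernel(x_obs, x_obs, sigma) + noise
    return k_xx - k_xo * k_xo / k_oo

x_obs = 0.0    # location of the constrained point (hypothetical)
x_query = 1.0  # location where the remaining uncertainty is inspected

for sigma in (4.0, 1.0, 0.25):  # large, medium, small
    prior = gaussian_kernel(x_query, x_query, sigma)
    post = posterior_variance(x_query, x_obs, sigma)
    print(f"sigma={sigma}: prior variance={prior:.3f}, posterior variance={post:.3f}")
```

In this sketch the prior variance is the same for every σ, while the posterior variance at a fixed distance from the observation grows as σ shrinks: a large σ lets one constrained point reduce uncertainty far away, whereas a small σ only affects its immediate neighbourhood.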

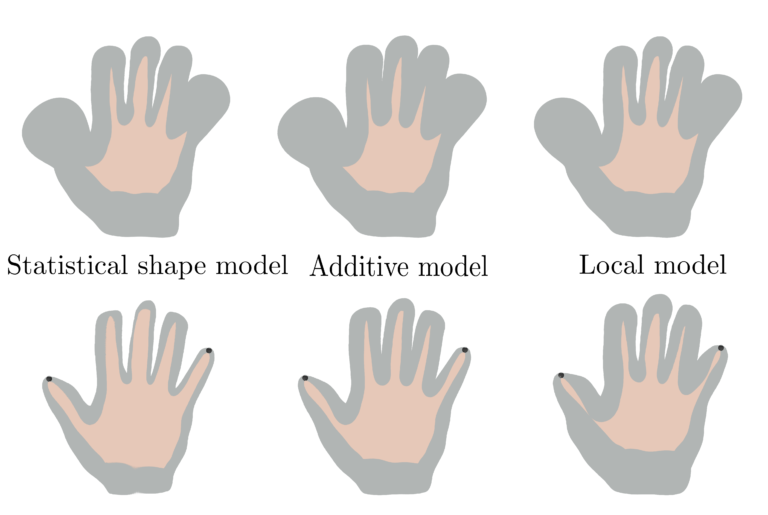

Figure 2: Confidence region of a statistical shape model (left), a statistical shape model with an additive Gaussian model (middle) and a localised statistical model (right). The upper row shows the prior and the bottom row the posterior after the point has been constrained using Gaussian Process regression.
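The three models in Figure 2 can be compared in a similar one-dimensional sketch. It rests on assumptions the caption does not spell out: the statistical shape model is represented by the sample covariance of a few example deformations, the additive model adds a Gaussian kernel to it, and the localised model multiplies it by a Gaussian kernel. The example deformations, kernel parameters and function names are hypothetical.

```python
import math

def gaussian_kernel(x, y, sigma=1.0, scale=1.0):
    """Scalar Gaussian kernel (assumed form, as in many GP texts)."""
    return scale * math.exp(-((x - y) ** 2) / sigma ** 2)

# Hypothetical example deformations standing in for training shapes.
examples = [lambda x: 1.0 + 0.5 * x, lambda x: -1.0 - 0.5 * x]

def ssm_kernel(x, y):
    """Sample covariance of the example deformations (a low-rank kernel)."""
    n = len(examples)
    mean_x = sum(u(x) for u in examples) / n
    mean_y = sum(u(y) for u in examples) / n
    return sum((u(x) - mean_x) * (u(y) - mean_y) for u in examples) / (n - 1)

def additive_kernel(x, y):
    """Assumption: shape-model kernel plus a small Gaussian kernel."""
    return ssm_kernel(x, y) + gaussian_kernel(x, y, sigma=1.0, scale=0.1)

def localised_kernel(x, y):
    """Assumption: shape-model kernel multiplied by a Gaussian kernel."""
    return ssm_kernel(x, y) * gaussian_kernel(x, y, sigma=1.0)

def posterior_variance(kernel, x, x_obs, noise=1e-6):
    """GP regression posterior variance at x for one observation at x_obs."""
    return kernel(x, x) - kernel(x, x_obs) ** 2 / (kernel(x_obs, x_obs) + noise)

x_obs, x_query = 0.0, 3.0  # constrained point and a point far from it
for name, kernel in [("shape model", ssm_kernel),
                     ("additive", additive_kernel),
                     ("localised", localised_kernel)]:
    prior = kernel(x_query, x_query)
    post = posterior_variance(kernel, x_query, x_obs)
    print(f"{name}: prior variance={prior:.3f}, posterior variance={post:.3f}")
```

With these toy inputs the low-rank shape model loses almost all of its variance everywhere once a single point is fixed, the additive model keeps some residual flexibility, and the localised model stays close to its prior variance away from the constrained point.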